CPS Evaluation Strategy 2025

- Foreword by Baljit Ubhey, Director of Strategy and Policy

- The Crown Prosecution Service (CPS) context

- Evaluation in the CPS to date

- Vision and Goals

- Proportionate evaluation: our approach to prioritisation

- Credible, consistent, and co-ordinated: our approach to governance

- Robust: Our approach to how we will evaluate

- Planned: Our commitment to publish an Evaluation Plan

Foreword by Baljit Ubhey, Director of Strategy and Policy

I am proud to be able to present the Crown Prosecution Service’s first Evaluation Strategy.

This Strategy is published during challenging times for us all. The pandemic has caused significant disruption to the services we provide across the Criminal Justice System, and we are committed to recovering from it. There has been increasing public scrutiny of our work, especially of the time taken to charge offenders and of the rate at which convictions are obtained. Meanwhile, there is pressure on all public spending.

In the CPS we value fairness, objectivity and transparency. It is right that we should be held to account for what we do, and it is right that we should be able to demonstrate that we spend public money well.

Evaluation therefore sits well with our core values. This Evaluation Strategy represents a commitment to collect and use high quality evidence in our decision making about policies and practice. Good evaluation will provide us with critical feedback to help us check that we are doing the right things, adjust our course if necessary, and demonstrate where we have added value.

Specifically, in this Strategy we commit to proportionate, credible, and robust evaluations. They must be proportionate, so that where we spend significant sums of public money the public can be sure we will evaluate the impact. They must be credible, consistent, and co-ordinated, so we will embed evaluation in our business planning and create new governance structures to assure our evaluations. They must be robust, so we will follow government best practice in the way we conduct them.

Following on from this Strategy we will publish an Evaluation Plan setting out which programmes we intend to formally evaluate and report, including our priorities, and those of HM Treasury. This will be drafted in accordance with the principles and governance set out here.

We will enhance our evaluation practice and embed this in our business planning process and in our culture so that it becomes simply the way we do things.

The Crown Prosecution Service (CPS) context

The Crown Prosecution Service (CPS) is a non-ministerial department. The CPS prosecutes criminal cases that have been investigated by the police and other investigative organisations in England and Wales. The CPS is independent and makes decisions independently of the police and government.

The CPS decides which cases should be prosecuted; determines the appropriate charges in more serious or complex cases and advises the police during the early stages of investigations; prepares cases and presents them at court; and provides information, assistance and support to victims and prosecution witnesses.

The CPS is led by the Director of Public Prosecutions and the Chief Executive Officer. The CPS forms part of the Law Officers’ remit. The Attorney General’s Office has overall responsibility for:

- The Crown Prosecution Service (CPS)

- Her Majesty’s Crown Prosecution Service Inspectorate (HMCPSI)

- The Government Legal Department (GLD)

- The Serious Fraud Office (SFO)

The CPS is divided into 14 regional Areas, each headed by a Chief Crown Prosecutor. There are 3 Directors of Legal Services. The rest of the senior leadership team comprises the Director of Strategy and Policy, the Director of Communications, the Chief Finance Officer, the Chief People Officer, and the Chief Digital and Information Officer.

In terms of size and funding, the CPS's total resource spending in 2020-21 was £567 million.[1]

We intend to develop our evaluation strategy and future evaluation plans in line with others across government, and we are keen to benchmark our progress against similarly sized and resourced bodies. For context, as of 31 March 2020, the arm's-length bodies closest to the CPS in spending terms included the Defence Science and Technology Laboratory (the scientific research arm of the Ministry of Defence), at £706 million; the National Crime Agency, at £485 million; and Arts Council England, at £465 million. By comparison, the Ministry of Justice's (MoJ) overall resource expenditure was £8.2 billion, making CPS spend less than 10% of that of the MoJ as a whole.[2]

The same source shows that at the end of March 2020 the CPS had 5,744 staff. Arm's-length bodies with workforces of comparable size included the Driver and Vehicle Licensing Agency, at 5,449, and HM Land Registry, at 5,184. Again for comparison, the MoJ's overall workforce for the period was 69,236.
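As a quick check on relative scale, the ratios below use only the figures quoted above. They are approximate, because the CPS spending figure is for 2020-21 while the MoJ figures are taken from the Cabinet Office data for the period to 31 March 2020:

```latex
\frac{\pounds 567\,\text{m}}{\pounds 8{,}200\,\text{m}} \approx 0.069 \;(\approx 6.9\%),
\qquad
\frac{5{,}744\ \text{staff}}{69{,}236\ \text{staff}} \approx 0.083 \;(\approx 8.3\%)
```

On both measures the CPS is well under a tenth of the size of the MoJ as a whole.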

Although the CPS has commissioned evaluations of core programmes and interventions, it has not previously published an Evaluation Strategy.

Evaluation in the CPS to date

Understanding the efficiency and effectiveness of all our programmes, projects and interventions is crucial to effective decision-making. Evaluation, defined as the systematic assessment of the design, implementation, and outcomes of an intervention,[3] is central to developing this understanding.

Best practice in appraising and evaluating interventions is set out in HM Treasury's Green and Magenta Books, which provide an overview of how to appraise and evaluate policies, and of what to consider in evaluation design, respectively.

Responsibility for evaluation within the CPS lies with the Strategy and Policy Directorate (SPD). SPD is a relatively new directorate, established in 2019, and is also responsible for developing and delivering policies and strategies across the CPS, working in collaboration with partners across the Service, Whitehall and beyond.

SPD runs a rigorous annual planning cycle across the organisation to develop and design our corporate Business Plan. This Plan captures high priority work from across the organisation to bring together a change portfolio to deliver our CPS 2025 Strategy.

Over the last 2 years, SPD staff have been working to incorporate best practice from the Magenta Book and from other government departments into our business planning process. For example, the Magenta Book states that developing a Theory of Change should be the first step in any evaluation. We have therefore introduced a Theory of Change process to capture the rationale for projects within the CPS Business Plan, including anticipated outputs, outcomes, impacts and success measures.

This process enables the CPS to ensure all its priority work is co-ordinated and supports delivery of our organisational strategy, whilst also laying the necessary foundations for evaluation of the implementation and outcomes of our high priority projects.

In addition to this work internally to enhance our planning, we also work closely with the Cabinet Office to support the Outcome Delivery Plan process in line with the rest of Government.

The CPS Priority Outcome Delivery Strategy demonstrates how our work aligns with Government priority outcomes on:

- improving public safety by delivering justice through independent and fair prosecution; and

- enhancing the effectiveness of the criminal justice system through timely prosecutions and improved casework quality.

This covers our most relevant projects and programmes of work from across the organisation, including, as outlined in the 2021 Spending Review:

- Support our work on rape and serious sexual offences (RASSO):

  - a. The Rape and Serious Sexual Offences Joint National Action Plan and the Rape Review, and the associated RASSO Performance Framework;

  - b. Operation Soteria.

- Respond to pressures such as police officer recruitment and the court backlog:

  - a. Strategic Workforce Planning;

  - b. Operational Recovery.

- Reform our service to victims.

- Invest in digital innovation:

  - a. Future Casework Tools and Digital Innovation.

Each of these makes a specific and direct contribution to our priority outcomes.

Alongside this rigorous approach to planning, we have invested significant time and energy in evaluating and assessing our performance and delivery.

However, we acknowledge that there is scope to improve the governance and co-ordination of our planning and evaluation work. This Evaluation Strategy, and the Evaluation Plan that will follow it, are an opportunity to take stock and recalibrate our evaluation capability in line with the best practice and guidance set out in HM Treasury's Magenta Book: Central Government Guidance on Evaluation.

Vision and Goals

The CPS plays a key role in the Criminal Justice System, ensuring fairness and justice. We are accountable for how we spend taxpayers’ money, and it is important to the CPS that we spend money wisely and achieve the best possible value from what we do. Evaluation provides us with critical feedback, to help us check that we are doing the right things, adjust our course if necessary, and demonstrate where we have added value.

Our overarching CPS Strategy 2025 consists of five main strategic aims, and our Evaluation Strategy aligns with these.

| CPS 2025 Theme | Strategic Aims | Evaluation Strategy |
|---|---|---|
| Our People | Our people have the skills and tools they need to succeed | Our staff understand the importance of evaluation in our work, and consider it at an early stage when developing policies or new ways of working |
| Digital Capability | We use data to drive change | We maximise the potential of our systems and data to facilitate monitoring and evaluation of all our key projects |
| Strategic Partnerships | We influence cross-CJS change through trusted relationships | We work in collaboration with partners across the CJS to ensure we have a consistent approach to evaluation, and share evidence on which to evaluate our performance |
| Casework Quality | Cases are dealt with effectively | Our evaluations are designed to enhance service delivery by helping us better understand how policies and ways of working affect our casework performance |
| Public Confidence | The public understand our value | Our evaluations are transparent and clear, so that the public have confidence in our commitment to improve performance and our willingness to be held accountable |

We know that good monitoring and evaluation will help us to:

- learn lessons, especially about what works best; and

- demonstrate accountability.

To these ends, we aim to evaluate – as appropriate:

- whether policies and interventions achieve their targeted outputs, outcomes, and impacts;

- whether those people we expect to benefit, do;

- whether there are unintended consequences;

- whether further improvements are possible;

- whether the context has affected the outcomes;

- whether the intervention is value for money; and

- what stakeholders think about our actions.

Evaluation with the purpose of learning about what works helps us to manage risk and avoid poor decision making. It provides better understanding of who can be helped by what measures. It also informs policy development and decisions.

Evaluation for reasons of accountability ensures we are transparent about how our money is spent and assesses whether we have made sound value for money decisions.

In common with other government departments, we aim to achieve our learning and accountability goals through a combination of three types of evaluation:

- Process evaluations: to examine activities during implementation, helping us learn and adapt what we are doing to improve outcomes.

- Impact evaluations: to measure outputs, outcomes, and impacts, assessing what tangible differences we have achieved.

- Value for Money evaluations: to assess whether our interventions provide good value for money.

Our vision is to deliver evaluation that is:

- Proportionate: prioritising the evaluations that are most needed.

- Credible, consistent, and co-ordinated: ensuring good governance.

- Robust: so that when we do evaluate, we do it well.

- Planned: so that stakeholders can hold us to account.

We have not yet fully realised this vision. In this Strategy we set out these four broad principles for how we intend to deliver evaluations.

Proportionate evaluation: our approach to prioritisation

Evaluation of government funded programmes is a priority in order to achieve value for money for taxpayers. Evaluation should be proportionate to the scope and scale of the programme or policy, and the CPS is committed to putting governance structures in place to ensure that we undertake evaluations appropriately.

As noted earlier, the positive returns from conducting evaluation can be broadly categorised as facilitating learning or accountability. It follows that evaluation is unlikely to be cost-effective if it does not add to the existing evidence base, or if its cost is disproportionate to the size of the initiative. Conversely, evaluation is likely to be especially helpful where programmes or policies are particularly novel or ambitious.

A number of departments have set out the principles they use to prioritise evaluations. For example, the Department for Transport[4] and the Department for Business, Energy and Industrial Strategy (BEIS)[5] have done so.

Our aim is to be as consistent as possible with these approaches across Government, while adapting them to recognise the unique role of the CPS and ensuring that our Evaluation Strategy is in keeping with our overall CPS 2025 Strategy.

In light of this, when deciding whether it is proportionate to conduct evaluations, we will have regard to the following considerations:

- the monetary value of the investment in the initiative / policy change;

- whether evaluation is an important part of determining whether policies / programmes are successful in helping meet statutory obligations such as the Public Sector Equality Duty;

- whether a specific evaluation has been directed by government (for example during the Spending Review);

- the degree of risk associated with an intervention;

- whether evaluation would contribute to the evidence base (and potentially enable analysis that would inform other programmes / initiatives);

- our accountability to the public: specifically, the need for transparency, and the need to consider public expectations with regard to evaluation.

In certain contexts, similar principles have been translated into decision criteria with firm cut-off points; for example, in some departments all programmes above a certain monetary value are evaluated. For the most part, however, it is not possible to reduce these decisions to fixed numerical rules, and we recognise that applying the principles above will be a matter of judgement. It is therefore important that we set out appropriate governance procedures that stipulate:

- at what key points during the lifecycle of an initiative decisions are taken as to whether evaluation is necessary;

- by whom these decisions should be made; and

- in what fora.

It is important to note that while the CPS is responsible for driving forward its own strategy and work programme, it sits within the wider Criminal Justice System. Some monitoring and evaluation activities will be led by, or delivered in collaboration with, others. We hope that the publication of all the Evaluation Strategies across the criminal justice system will further our already constructive dialogue with others across the system and enable the production of co-ordinated Evaluation Plans as the next key stage of this process.

Credible, consistent, and co-ordinated: our approach to governance

Government departments with successful evaluation programmes have achieved consistency and co-ordination by embedding the requirement for monitoring and evaluation into their corporate governance structures. This means drawing on the appropriate skillsets and allocating decision-making responsibilities in line with other role-based responsibilities.

Credibility is often achieved by ensuring a degree of objectivity.[6] This can be done in several ways: for example, by ensuring that those who conduct or commission the evaluation are separate from the delivery team designing the intervention; by using respected external suppliers; and through peer review of the design and outputs of the evaluation work.

We intend to follow these examples.

Our approach to governance will be focused on three key aspects of our evaluation activities:

- the decision whether to evaluate;

- quality assurance of the evaluation itself, including the method chosen and the accuracy of analysis; and

- the monitoring of CPS activities related to evaluation findings. This will include carrying forward lessons learned into future policies and activities.

| Decision | Timing | Decision maker/responsibility | Assurance criteria | Information needed |
|---|---|---|---|---|
| The decision to conduct an evaluation | Annually, during the Business Planning process | The CPS Benefits Realisation Group | Has this been determined using the key CPS principles for evaluation? | Theory of Change process to be followed by Policy teams, and information provided to decision makers |
| Quality assurance of the evaluation | As a minimum, at the evaluation design stage and again on conclusion of the evaluation. For larger-scale evaluations, regular quality assurance should be built into the project design. | Overseen by the Research/Evaluation Lead | Has the evaluation design been achieved to the requisite level (for example, using the Nesta framework as a guide)? Are the methods appropriate (using the Magenta Book)? Have the data collection and analysis been appropriate? Are the conclusions drawn supported by the data? | Evaluation data, analysis and reporting |
| Monitoring implementation of evaluation lessons | Post-evaluation | The CPS Benefits Realisation Group | | Evaluation reports and recommendations |

Robust: Our approach to how we will evaluate

We have identified good practice in other government departments and, drawing on it, we will ensure that once a decision has been taken to evaluate, we:

1. Set out our rationale for how an intervention will work.

We will set out objectives and anticipated effects using a Theory of Change approach that consists of several steps.

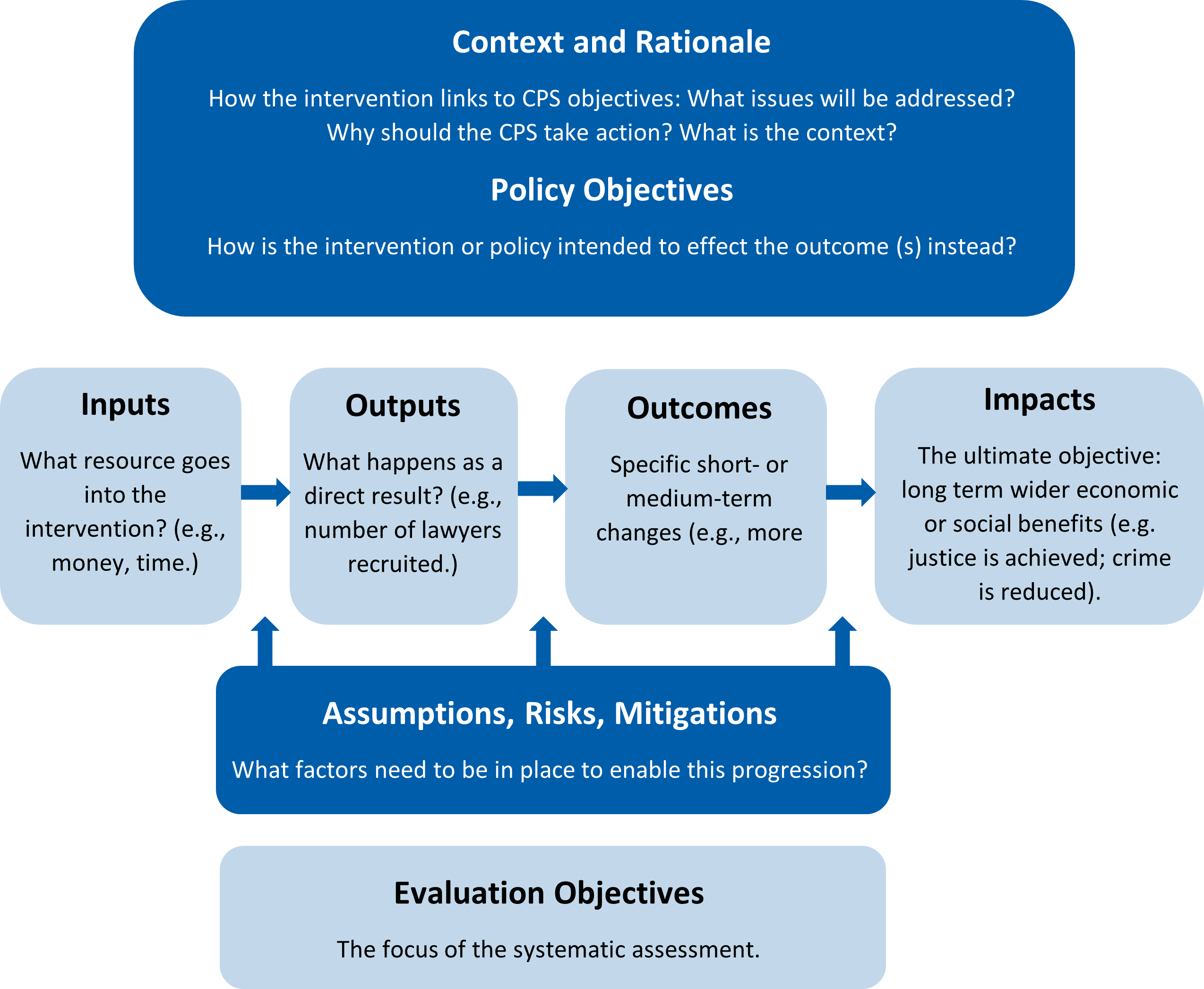

Description of diagram:

- First, we set out the context and rationale. This will address questions such as: How does this intervention link to Crown Prosecution Service objectives? What issues will be addressed? Why should the CPS take action? What is the context?

- We also set out the policy objectives. How is the intervention or policy intended to bring about the desired outcomes?

- Next, we set out the required inputs, and intended outputs, outcomes, and impacts of the intervention or policy.

- Inputs are the resources that go into the intervention. Examples might be additional money and time.

- Outputs are what happens as a direct result of those inputs. For example, an output of providing additional money to the CPS might be that additional lawyers are recruited.

- Outcomes are the specific short- or medium-term changes that occur. For example, an outcome of recruiting more lawyers might be that more cases are processed.

- Impacts are the ultimate objectives. These are the longer-term, wider economic or social benefits. For example, the impact of processing more cases might be that justice is better achieved and that crime is reduced.

- In addition to setting out the inputs, outputs, outcomes and impacts, we will also set out the assumptions, risks and mitigations. These consider what factors need to be in place to enable progression all the way from inputs to impacts.

- Finally, we set out the evaluation objectives, which are the focus of the systematic assessment.
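To illustrate how these elements fit together, a Theory of Change can be captured as a simple structured record. The sketch below is a hypothetical Python illustration, not a CPS system or template: the class `TheoryOfChange`, its field names and the `missing_stages` check are assumptions made for this example, and the values reuse the illustrative funding-to-impact chain described above.

```python
from dataclasses import dataclass, field


@dataclass
class TheoryOfChange:
    """Hypothetical record of a Theory of Change; field names are illustrative."""
    context_and_rationale: str
    policy_objectives: list[str]
    inputs: list[str]
    outputs: list[str]
    outcomes: list[str]
    impacts: list[str]
    assumptions: list[str] = field(default_factory=list)
    risks_and_mitigations: dict[str, str] = field(default_factory=dict)
    evaluation_objectives: list[str] = field(default_factory=list)

    def missing_stages(self) -> list[str]:
        """Return the names of results-chain stages that have not been filled in."""
        chain = {
            "inputs": self.inputs,
            "outputs": self.outputs,
            "outcomes": self.outcomes,
            "impacts": self.impacts,
        }
        return [name for name, items in chain.items() if not items]

    def results_chain(self) -> str:
        """Render the inputs -> outputs -> outcomes -> impacts chain on one line."""
        stages = [self.inputs, self.outputs, self.outcomes, self.impacts]
        return " -> ".join("; ".join(stage) for stage in stages)


# Illustrative values only, based on the example in the text; not CPS data.
example = TheoryOfChange(
    context_and_rationale="Illustrative: additional resource to increase prosecution capacity",
    policy_objectives=["Illustrative: process more cases"],
    inputs=["Additional funding"],
    outputs=["Additional lawyers recruited"],
    outcomes=["More cases processed"],
    impacts=["Justice better achieved", "Crime reduced"],
    assumptions=["Illustrative: recruited lawyers can take on casework promptly"],
)

print(example.results_chain())
# Additional funding -> Additional lawyers recruited -> More cases processed -> Justice better achieved; Crime reduced
print(example.missing_stages())
# []
```

A check such as `missing_stages` is one way a planning team might confirm that every link in the chain has been articulated before an evaluation is designed; the assumptions and risks fields are kept alongside the chain because they describe what must hold for each step to lead to the next.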

2. Choose the evaluation approach and define what questions to answer.

We will assess what level of evaluation is needed, identify the questions to be addressed during the evaluation, the data required, and the methods needed.

The CPS will use the Magenta Book to guide our choices of evaluation approach. We will deploy experimental, quasi-experimental, and theory-based evaluations as appropriate.

The method used must be sensitive to the context. It will not always be possible to use experimental methods or control groups, for example if it would be unethical to deny a control group access to an intervention that has a very high chance of creating better access to justice.

In line with best practice identified by the Cabinet Office Evaluation Task Force, we will use the Nesta Standards of Evidence as a tool to challenge our thinking about evaluation, and we will aim to evaluate at the highest level practicable.

The Nesta Standards of Evidence are:

| Level | Demonstrated by | Likely type of evidence gathering |
|---|---|---|
| Level 1 | The provision of a logical reason why an intervention could have an impact, and why that represents an improvement on the current situation. | Existing data, research, and theory. |
| Level 2 | Data showing change for those receiving the intervention. | Pre- and post-intervention surveys; panel studies. |
| Level 3 | Data that allow conclusions to be drawn about causality: for example, showing impact for those receiving the intervention and no impact for a control group. | Methods including experimental design and the use of control groups. |
| Level 4 | Data that explain why the intervention has an impact. An independent evaluation for validation purposes. An evaluation demonstrating that the benefits justify the costs and that the intervention could reasonably be rolled out to multiple locations. | A robust independent evaluation that investigates and validates the nature of the impact. Documented standardisation of delivery and processes. Data on costs and benefits. |
| Level 5 | Data that demonstrate the intervention could be operated elsewhere and scaled up. | Multiple replication evaluations; future scenario analysis; fidelity evaluation. |
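One way to read the table is as a progression, in which each level builds on the evidence required at the levels below it. The following hypothetical Python sketch assumes that cumulative reading; this is a simplification, since in practice assigning a level is a matter of judgement. The names `NestaLevel` and `highest_level` are illustrative and are not part of any Nesta or CPS tool.

```python
from enum import IntEnum


class NestaLevel(IntEnum):
    """Illustrative encoding of the five Nesta Standards of Evidence levels."""
    NONE = 0
    LOGICAL_REASON = 1          # Level 1: logical reason why it could have an impact
    CHANGE_OBSERVED = 2         # Level 2: data showing change for recipients
    CAUSALITY = 3               # Level 3: data supporting causal conclusions (e.g. control group)
    INDEPENDENT_VALIDATION = 4  # Level 4: independent evaluation explaining impact, with cost data
    REPLICATION_AT_SCALE = 5    # Level 5: evidence it can operate elsewhere and scale up


def highest_level(evidence: set[NestaLevel]) -> NestaLevel:
    """Return the highest level reached, treating the levels as cumulative.

    Simplifying assumption: a level only counts if every level below it is
    also evidenced, so a gap caps the result at the last unbroken level.
    """
    reached = NestaLevel.NONE
    for level in sorted(NestaLevel):
        if level == NestaLevel.NONE:
            continue
        if level not in evidence:
            break  # a missing level stops the progression here
        reached = level
    return reached
```

For instance, under this assumption an intervention with a clear rationale and pre- and post-intervention survey data, but no control group, would sit at Level 2: `highest_level({NestaLevel.LOGICAL_REASON, NestaLevel.CHANGE_OBSERVED})` returns `NestaLevel.CHANGE_OBSERVED`.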

3. Conduct the evaluation

We will conduct or commission the evaluation. Which of these we do will be determined by reference to the principles set out above.

We will also consider capacity and capability, and the need for independence. Evaluations should be commissioned, delivered and quality assured by persons independent of the intervention design team, and with appropriate technical skills.

4. Publish and use the findings

We will follow the guidance in the Magenta Book and take into account the Government Social Research (GSR) Publication Protocol,[7] which provides cross-government advice on the publication of research, including evaluation.

As a non-ministerial department, we are not obliged to follow this Protocol; however, it provides a statement of good practice which we will adhere to wherever practicable. Exceptions might arise where information is especially sensitive, or where disclosure might compromise the effectiveness of an ongoing intervention. The Protocol's principles are:

- Principle 1: The products of government social research and analysis will be made publicly available.

- Principle 2: There will be prompt release of all government social research and analysis.

- Principle 3: Research and analysis must be released in a way that promotes public trust.

- Principle 4: Clear communication plans should be developed for all social research and analysis produced by government.

- Principle 5: Responsibility for the release of research and analysis produced by government must be clear.

We understand that the Evaluation Task Force (ETF) will be releasing an Evaluation Registry as a digital service on gov.uk, which will allow departments to publish their evaluations in a central location. Once it is released, we will use the Registry to improve the visibility of our evaluations.

Planned: Our commitment to publish an Evaluation Plan

We will publish an Evaluation Plan setting out which programmes we intend to formally evaluate and report, including our priorities, and those of HM Treasury. It is important that the CPS Evaluation Plan is drafted in accordance with the principles and governance set out in this Strategy document.

We will include:

- details of expected evaluation methodologies;

- broad timelines; and

- how evidence generated is expected to be used.

We hope to publish our Evaluation Plan early in 2023. Some of these priorities will be multi-year, so the Plan may be refreshed periodically as necessary. Throughout, it will guide our internal work, and the requirements to deliver against it will be factored into corporate planning across the organisation.

Notes

1. CPS Annual Report and Accounts 2020-21.

2. Cabinet Office, Public Bodies Directory (2020). The latest directory provides an overview of all organisations classified as public bodies, including non-ministerial departments such as the CPS.

3. HM Treasury, Magenta Book, p. 11.

4. Department for Transport, Monitoring and Evaluation Strategy (2013).

5. BEIS, Monitoring and Evaluation Framework, BEIS Research Paper Number 2020/016.

6. HM Treasury, Magenta Book, 1.9.

7. Government Social Research, Publishing Research and Analysis in Government: Government Social Research Protocol (2015).